Prediction

Top 50 versus Bottom 50

While we understand that a song’s lyrics are not generally causal to where a given song might land on the charts, we had a hunch that there might at least be a correlation between a song’s position and its lyric content that we could model, train, and ultimately do some prediction.

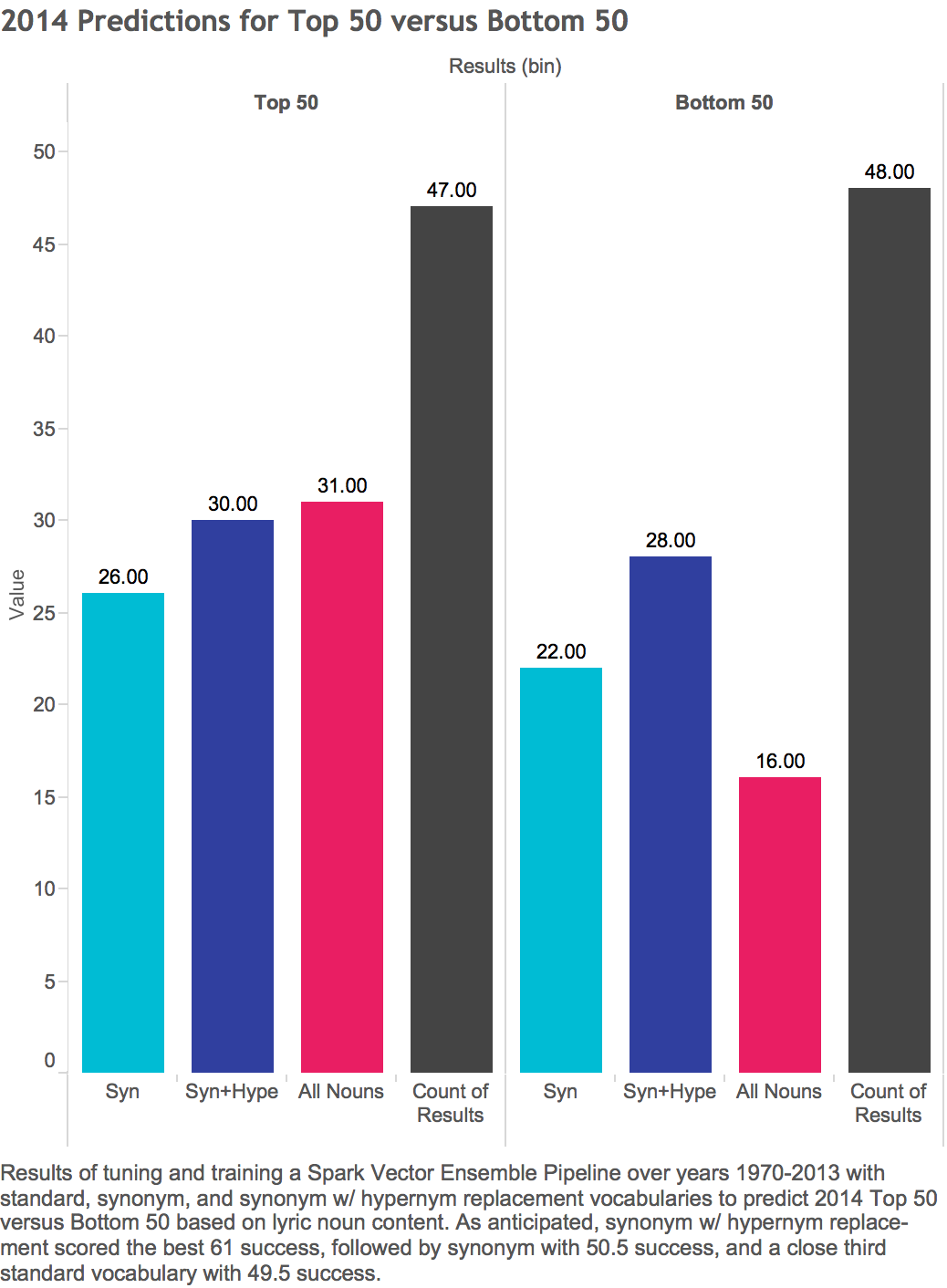

Having already explored Topic Modeling with Latent Factors as shown in the previous section, we wanted to separately focus on supervised learning techniques that would be more readily interpretable. Ultimately, we landed on predicting the ‘Top 50 versus Bottom 50’ positions on the 2014 charts, a balanced set of positives and negatives, also offering a binary prediction, where positives values indicate ‘In the Top 50’ and negative values indicate ‘In the Bottom 50’.

We also wanted to again leverage the cluster computing framework Spark as we had used previously in establishing the Vocabularies.

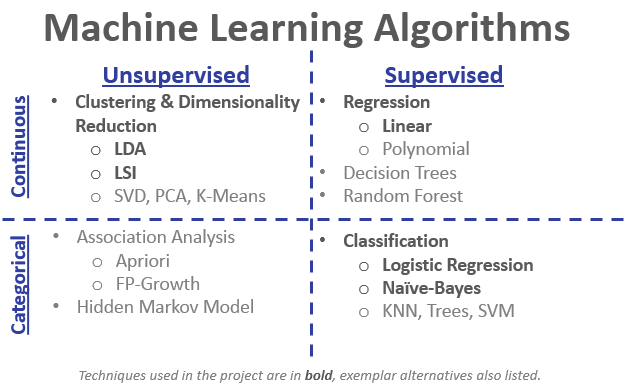

For prediction, we were interested in its Machine Learning APIs.

After some trial, error, and tuning, we used Spark’s Pipeline API to apply Logistic Regression learning algorithm, or estimator, which turned out to be a solid choice for this exploration as it favors binary, balanced data.

More in-depth information for our approach and results can be found at Vector Ensemble Notebook in addition to more cursory information in the project Master Process Notebook.

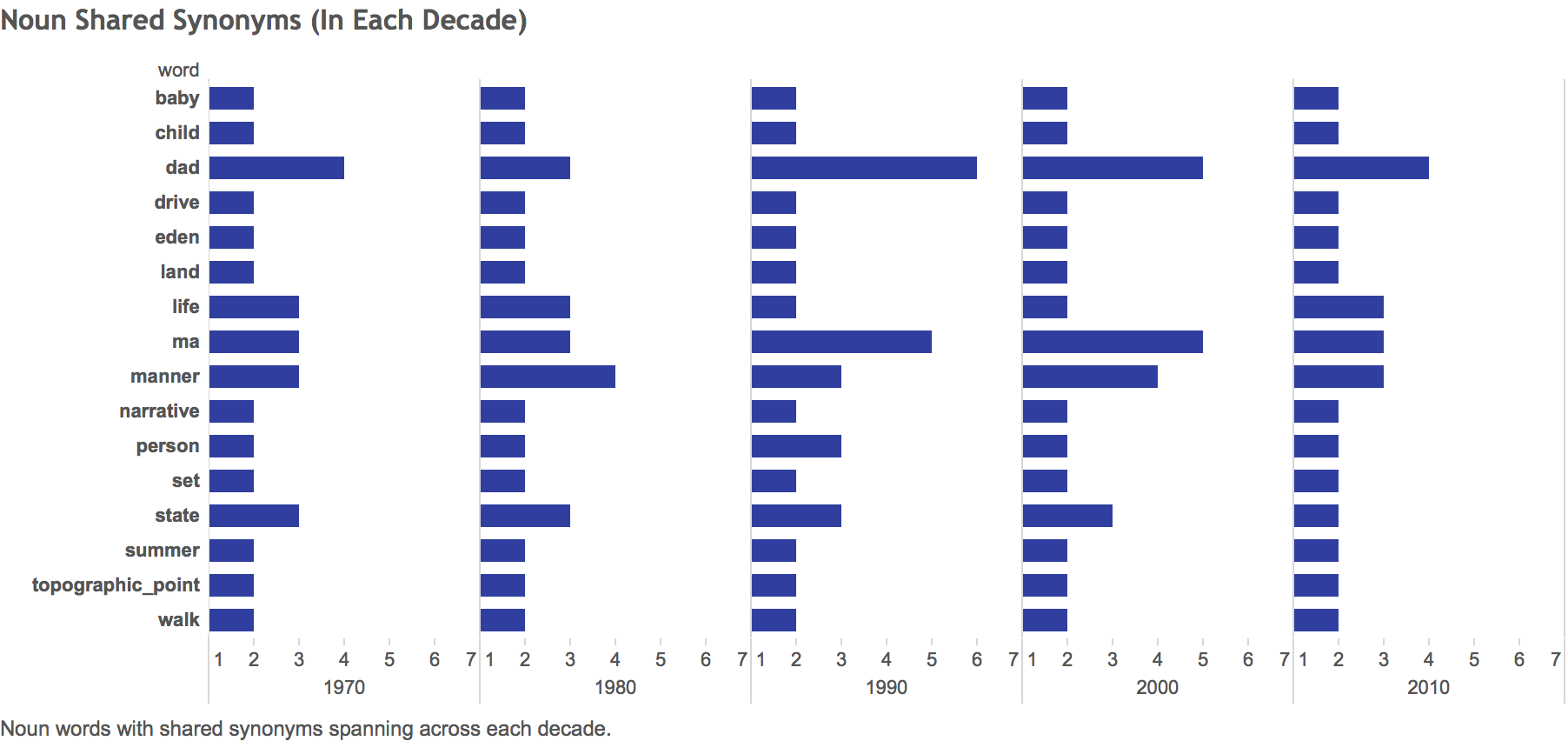

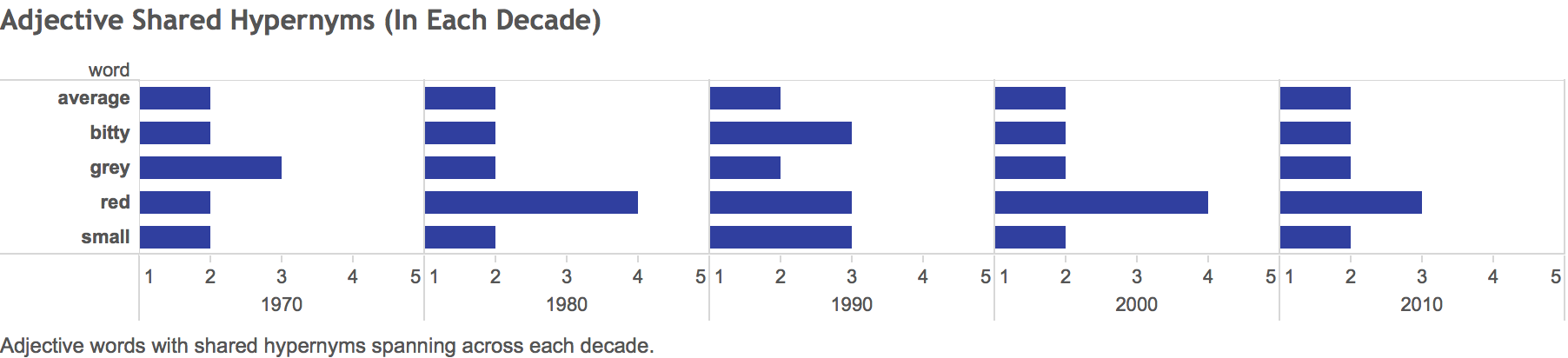

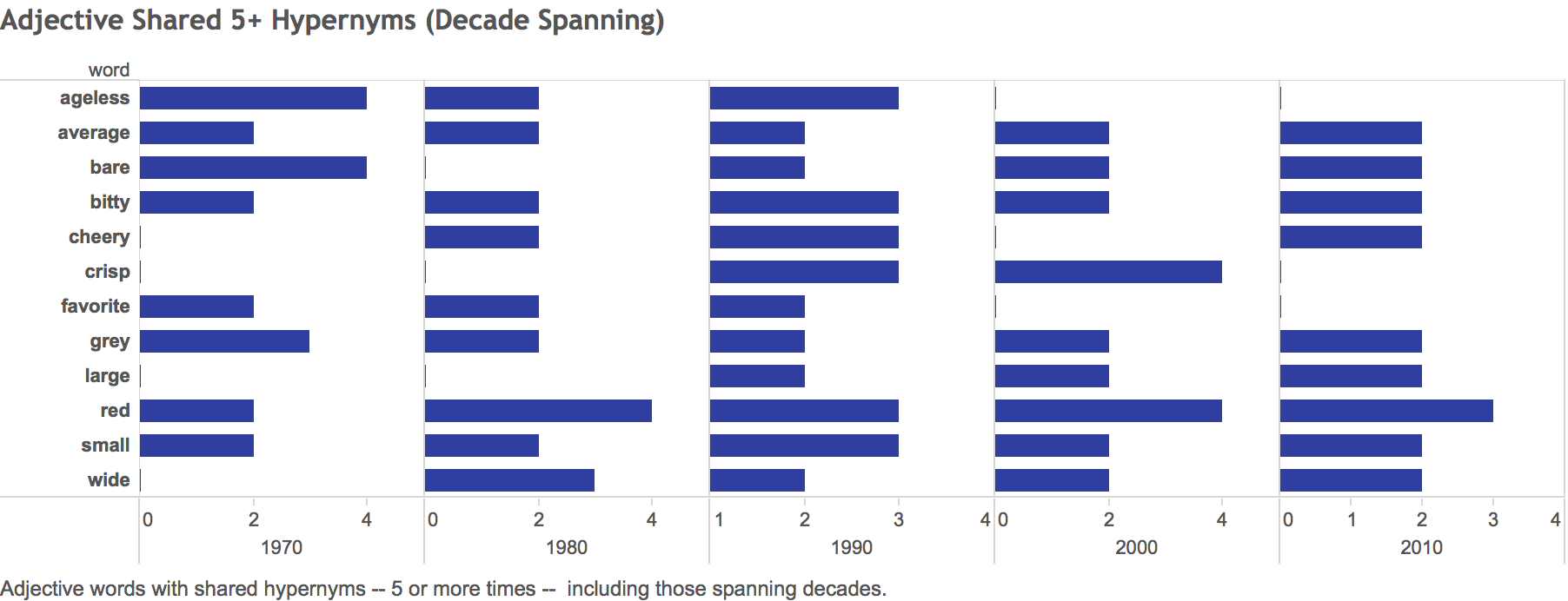

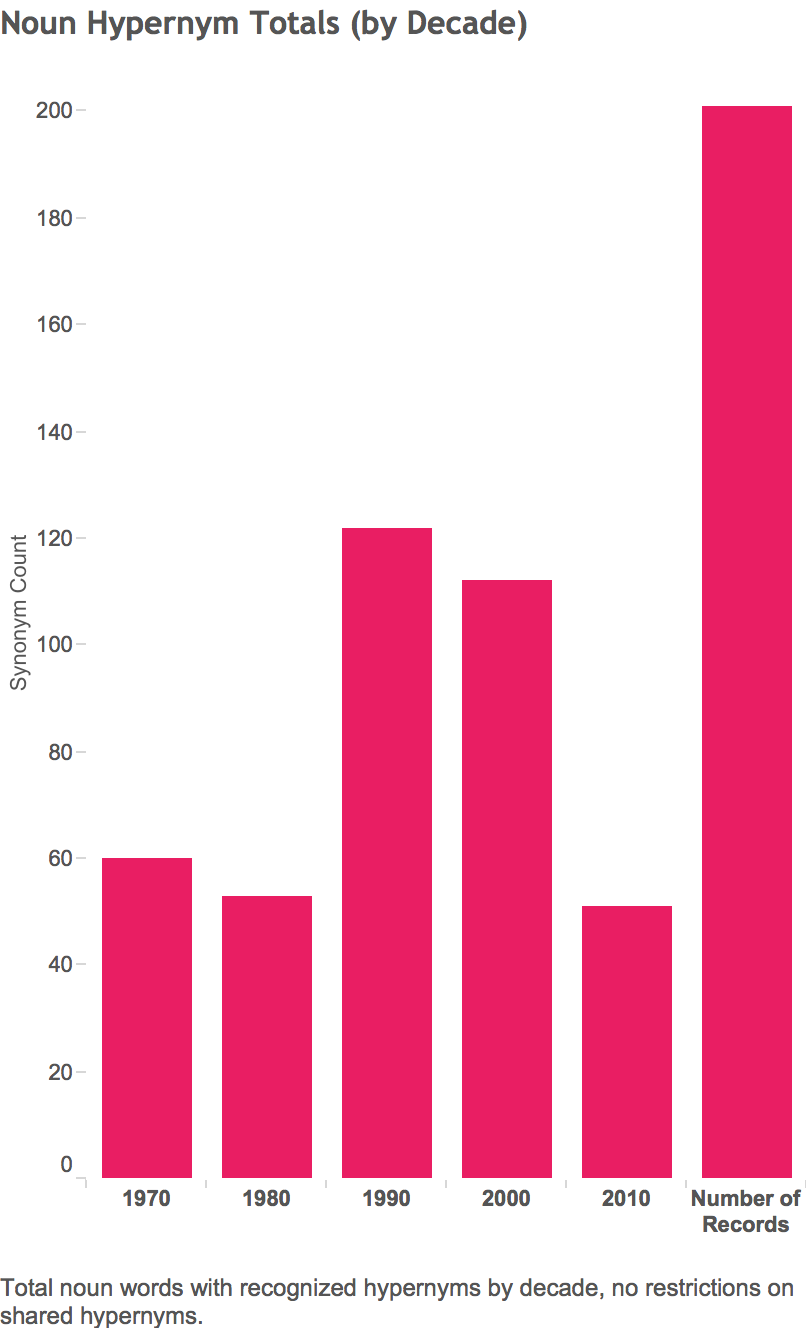

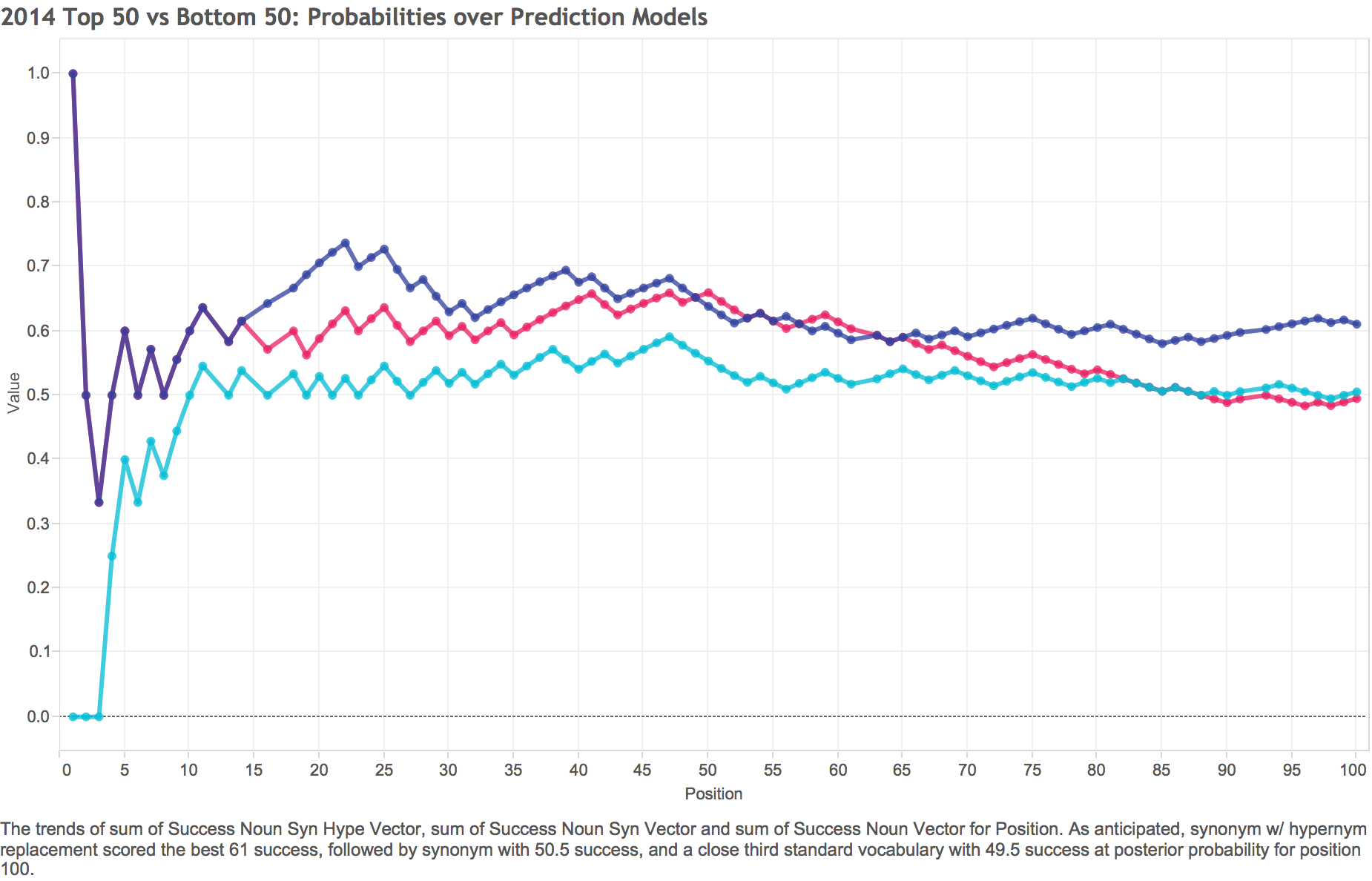

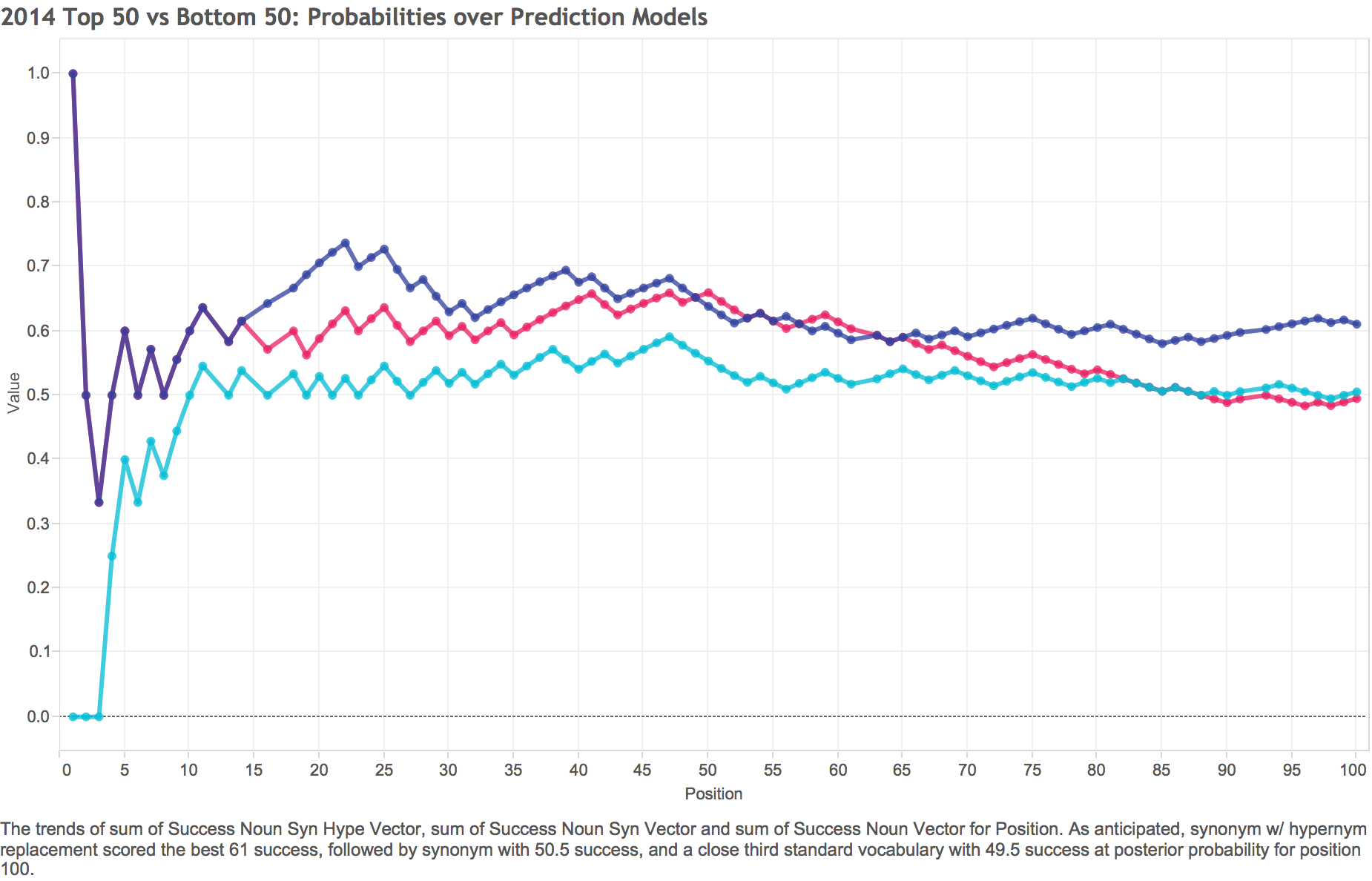

First, we tuned and fit models against noun vectors derived from all 3 Vocabularies — Initial, Shrunken-1, and Shrunken-2 — training on all data from 1970-2013 using the tuned hyperparameters.

Then we predicted ‘Top 50 versus Bottom 50’ exclusively for 2014 using the same models derived from the 3 vocabularies.

- Legend

- Nouns Synonym with Hypernym replacement

- Nouns Synonym

- Nouns

Prediction yielded the results shown in the table below. Note: only 95 of 100 songs in 2014 had populated noun vectors for all three vocabularies.

| Vocabulary |

Posterior Percent |

Hits |

Misses |

| Initial (Nouns) |

49.47 |

47 |

48 |

| Shrunken-1 (Nouns Synonyms) |

50.53 |

48 |

47 |

| Shrunken-2 (Nouns Synonym with Hypernym Replacement) |

61.05 |

58 |

37 |

At least to the degree that lyrics and position are correlated, the results of predicting the top / bottom splits are consistent with our intuitions. Initial vocabulary (vectorized to allow only each unique noun 1x max per song) was the least performant, slightly improved upon by model fit to Shrunken-1 (nouns with synonym replacement, also vectorized using same reduction rules as Initial), then a significant performance gain realized by Shrunken-2 noun vocabulary (synonyms with hypernym replacement, vectorized using same rules). Shrunken-2 correctly predicted 58 of 95 non-empty lyrics in 2014.

Phrase to Genre Prediction

Our final analytical technique uses a classifier to predict the genre that an ad-hoc, snippet of text is most likely to have come from.

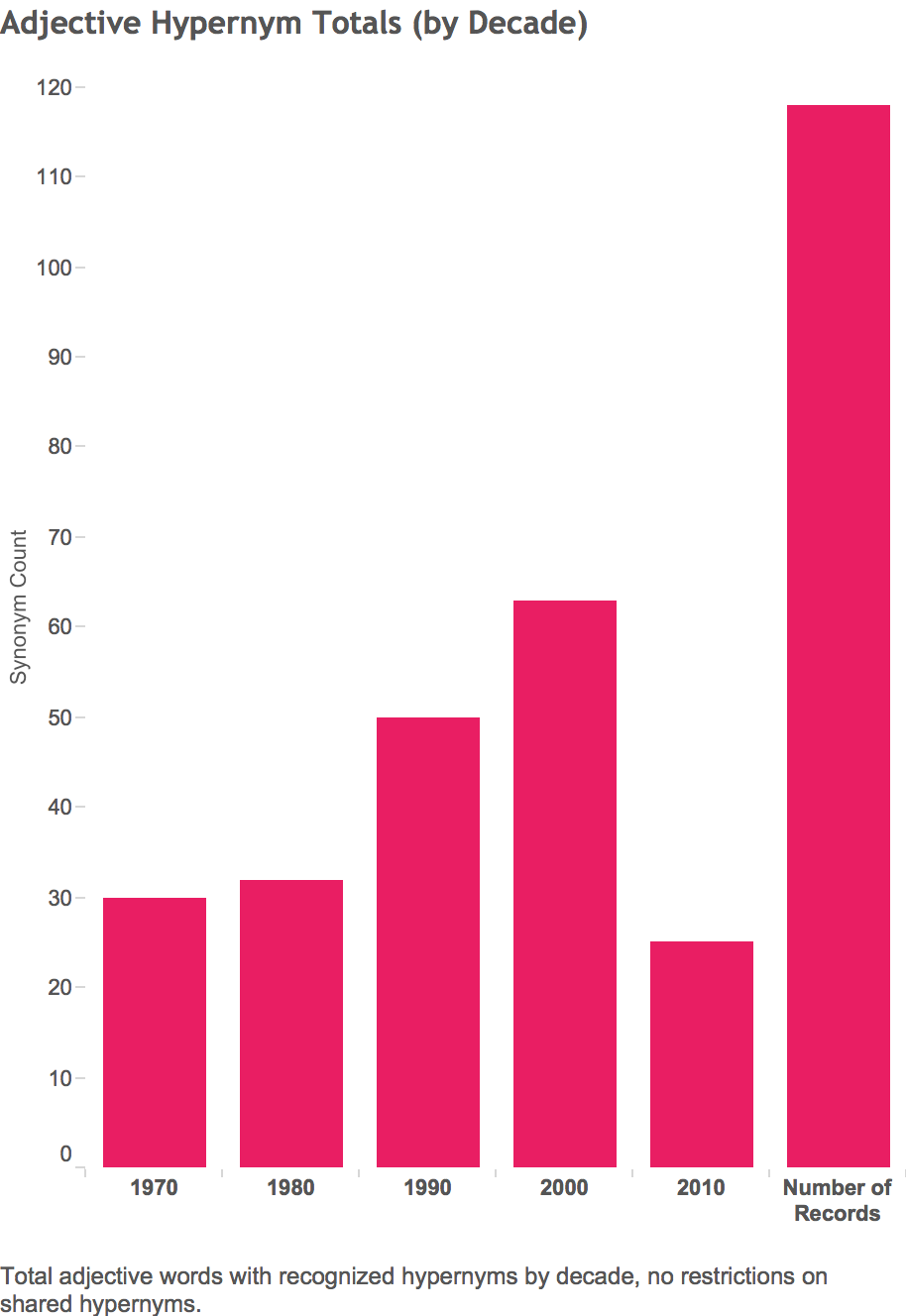

For this we use the Natural Language Tool Kit NLTK Python library and its Positive Naive Bayes Classifier module. We split the data into a training set and a testing set, and for each of the top 15 genres constructed a classifier using songs identified with the genre as the positive examples, and all other songs in the training set as negative examples.

For each included genre, a prior probability is calculated by calculating the relative frequency of songs within that genre in the training set multiplied by 0.097, the number of genres divided by the total number of songs. To see whether the choice of prior influences the quality of the model's predictions, the process is run with several other values for the prior, which are uniform and arbitrarily set. In the table, the "v' stands for a variable prior based on the genre-specific calculation; the others are uniform, meaning the same prior was used regardless of the relative frequency of songs in the relevant genre.

|

0.5 |

0.2 |

0.3 |

0.1 |

0.05 |

0.01 |

0.02 |

0.097 |

| true positive: |

1102 |

385 |

642 |

221 |

152 |

49 |

65 |

106 |

| false positive: |

6370 |

1837 |

3199 |

873 |

330 |

73 |

127 |

169 |

| false negative: |

496 |

1234 |

1003 |

1449 |

1492 |

1625 |

1636 |

1511 |

| true negative: |

4527 |

9039 |

7651 |

9952 |

10521 |

10748 |

10667 |

10709 |

| Right/Total |

0.450 |

0.754 |

0.663 |

0.814 |

0.854 |

0.854 |

0.858 |

0.865 |

Results

Total Positive: 1598

Total negative: 10897

Pct Positive: 0.128

These results indicate that the data are highly unbalanced in the negative direction, and indeed a simple baseline that always predicts negative would have a success rate comparable to the best prior's success rate, which was the variable prior based on the genre's overall prevalence.

Inspired by the adage that "all models are wrong, but some models are are useful," we resort to the obvious deployment of this battery of classifiers: Can it match arbitrarily chosen text to the genre from which it would come? These results suggest the answer is a qualified yes.

| Input | Response |

|---|

| 'how about we dance first' |

Dance Music |

| 'beat the bitch with a bat' |

Hip hop Music |

| 'you know i love you forever and ever' |

Soft Rock |

| 'gun shoot baby' |

Hard Rock |

| 'gun shoot' |

Hard Rock |

| 'woman left me, took my truck' |

Country Music |

To add your own words or learn more about our approach, see the

Positive Naive Bayes Classifier notebook.